The Strategy Bridge: From KPIs to Strategies That Actually Work

Turn your KPIs from a quarterly deck ornament into a systematic machine for generating product strategy.

This is the second post in a series about turning metrics into action. Part 1 covered building KPI trees that pass the Tuesday Afternoon Test. You can read each post on its own, but you'll get the best results by combining the two.

Thanks for reading RoadToPM! Make sure to subscribe to stay up to date ✨✨✨

Your KPI tree shows that 40% of users churn in their first month because they “can’t find the features they need”.

Your team nods knowingly in the quarterly business review.

Someone suggests “improving discoverability”.

Everyone agrees.

The meeting ends.

Three months later, the metric hasn’t moved.

This happens because somewhere between “here’s what we need to measure” and “here’s what we’re shipping next sprint” there’s a massive gap.

We call it strategy, but most teams treat it like magic: throw enough brainstorming sessions at the problem and hope something sticks.

I’ve watched dozens of teams build perfect KPI trees that never generate a single useful decision. The tree sits in a deck, gets referenced in planning meetings, maybe gets updated once a quarter. Meanwhile, the team keeps shipping features based on whoever argued loudest in the last roadmap discussion.

The real problem? We’ve gotten good at building KPI trees but terrible at turning them into strategies. We have this diagnostic tool that tells us exactly what to measure, but no systematic way to figure out what to actually do about it.

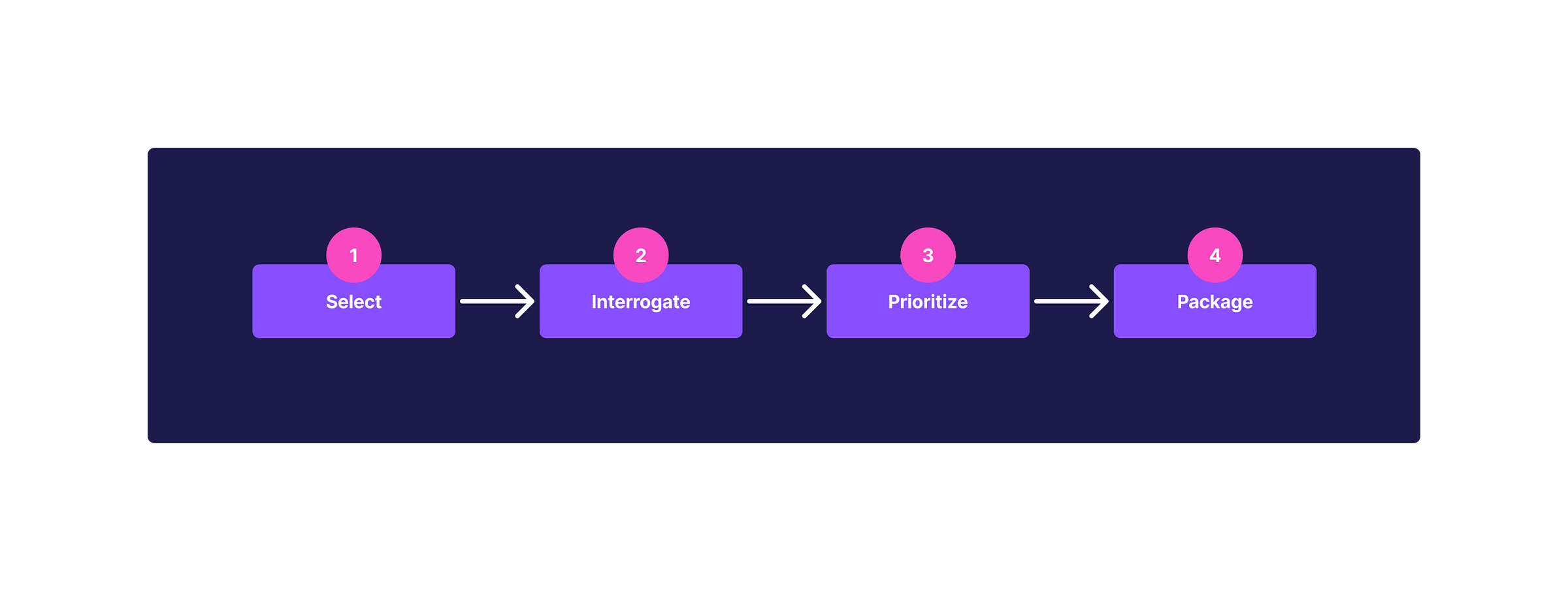

The Strategy Bridge Framework

The Strategy Bridge transforms your KPI tree from a measurement tool into an action-generating machine.

The core insight: every strategy is just a hypothesis about moving a specific part of your KPI tree.

Phase 1: Node Selection

You can’t interrogate your entire KPI tree.

Pick 1-2 driver metrics or 3-4 input metrics where you have the most leverage.

Two criteria:

High Impact: Moving this metric meaningfully affects your North Star

Direct Influence: Your team can actually take actions that affect it

Skip purely lagging indicators or metrics outside your team’s control.

💡 Types of metrics

Driver metrics sit in the middle of your KPI tree. They’re the 2-3 key behaviors or outcomes that directly move your North Star. Think “Weekly Active Users” or “Feature Adoption Rate”. They’re not your ultimate goal, but they’re the critical path to getting there.

Input metrics are one level deeper: the specific, controllable actions that feed into drivers. If your driver metric is “Feature Adoption Rate”, your input metrics might be “Onboarding Completion”, “In-App Tutorial Views” or “Feature Discovery Click-Through Rate”.

The tradeoff: Driver metrics have bigger impact but less direct control. Input metrics give you more levers to pull but require connecting more dots to see North Star movement. Pick drivers when you need breakthrough impact. Pick inputs when you need quick wins or when your drivers are stuck and you need to work at a more granular level.
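If your KPI tree lives in a spreadsheet or a script rather than a slide, the two selection criteria become a simple filter. Here's a minimal sketch, assuming a hypothetical 1-5 score for impact and influence on each node and an arbitrary cutoff of 4. The `MetricNode` class and `select_nodes` helper are illustrative names, the North Star name and every score are invented, and only the driver and input metric names come from the examples above.

```python
from __future__ import annotations
from dataclasses import dataclass, field

@dataclass
class MetricNode:
    name: str
    level: str                  # "north_star", "driver" or "input"
    impact: int = 0             # hypothetical 1-5: effect on the North Star
    influence: int = 0          # hypothetical 1-5: how directly the team can move it
    lagging: bool = False       # purely lagging indicators get skipped
    children: list[MetricNode] = field(default_factory=list)

def walk(node: MetricNode):
    """Yield every node in the tree, depth-first."""
    yield node
    for child in node.children:
        yield from walk(child)

def select_nodes(root: MetricNode, level: str, limit: int, cutoff: int = 4) -> list[MetricNode]:
    """Return up to `limit` nodes at `level` that pass both criteria."""
    candidates = [
        n for n in walk(root)
        if n.level == level
        and not n.lagging
        and n.impact >= cutoff      # High Impact
        and n.influence >= cutoff   # Direct Influence
    ]
    # Strongest combined leverage first
    candidates.sort(key=lambda n: n.impact + n.influence, reverse=True)
    return candidates[:limit]

# Illustrative tree: the North Star name and all scores are made up
tree = MetricNode("Retained Paying Users", "north_star", children=[
    MetricNode("Net Revenue Retention", "driver", impact=5, influence=4, lagging=True),
    MetricNode("Weekly Active Users", "driver", impact=5, influence=2),
    MetricNode("Feature Adoption Rate", "driver", impact=4, influence=4, children=[
        MetricNode("Onboarding Completion", "input", impact=4, influence=5),
        MetricNode("In-App Tutorial Views", "input", impact=2, influence=5),
        MetricNode("Feature Discovery Click-Through Rate", "input", impact=4, influence=4),
    ]),
])

print([n.name for n in select_nodes(tree, "driver", limit=2)])  # pick 1-2 drivers
print([n.name for n in select_nodes(tree, "input", limit=4)])   # or 3-4 inputs
```

With these made-up scores, the lagging revenue metric is skipped outright, Weekly Active Users drops out on influence and tutorial views drop out on impact. The cutoff is still a judgment call; writing it down just keeps that call explicit.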

Phase 2: Node Interrogation

For each selected node, ask the Influence Question: “What are 3-5 things that could realistically move this metric in the next quarter?”

Don’t overthink it.

You’re looking for testable hypotheses, not perfect answers.

Example: Your driver metric is “Advanced Search Feature Adoption”. Exit surveys reveal that 40% of first-month churners say your product “doesn’t have the search functionality I need”.

Plot twist: Your product has advanced search. It’s buried three clicks deep.

Users are literally canceling paid subscriptions because they can’t find a feature that already exists.

Your influence hypotheses then might be:

Adding a prominent search bar to the main dashboard

Including advanced search in the onboarding tutorial

Sending day-2 emails highlighting search capabilities

Adding contextual search hints when users browse large datasets

Creating a “power user shortcuts” tooltip that surfaces in moments of frustration

Phase 3: Hypothesis Prioritization

Most PMs stop here or try to do everything at once. Both fail.

Rate each hypothesis on three dimensions:

Impact: How much could this move the metric? (1-5)

Confidence: How sure are we this will work? (1-5)

Effort: How much work to test? (1-5)

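The winners aren’t the highest-impact ideas; they’re the ones with the best Impact × Confidence ÷ Effort ratio. This is a variant of the ICE framework (Impact, Confidence, Ease), with Effort in the denominator taking the place of Ease.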
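Here's a minimal Python sketch of the scoring step, using the five search hypotheses from Phase 2. The 1-5 scores are invented for illustration, not measured, and the `Hypothesis` class and `prioritize` helper are hypothetical names rather than part of any ICE tool.

```python
from __future__ import annotations
from dataclasses import dataclass

@dataclass
class Hypothesis:
    action: str
    impact: int      # 1-5: how much could this move the metric?
    confidence: int  # 1-5: how sure are we this will work?
    effort: int      # 1-5: how much work to test? (higher = more work)

    def __post_init__(self) -> None:
        # Reject scores outside the 1-5 scale (this also rules out dividing by zero)
        for name in ("impact", "confidence", "effort"):
            value = getattr(self, name)
            if not 1 <= value <= 5:
                raise ValueError(f"{name} must be between 1 and 5, got {value}")

    @property
    def score(self) -> float:
        """Impact × Confidence ÷ Effort."""
        return self.impact * self.confidence / self.effort

def prioritize(hypotheses: list[Hypothesis]) -> list[Hypothesis]:
    """Highest score first."""
    return sorted(hypotheses, key=lambda h: h.score, reverse=True)

# Scores below are made up for illustration only
backlog = [
    Hypothesis("Prominent search bar on main dashboard", impact=5, confidence=4, effort=2),
    Hypothesis("Advanced search in onboarding tutorial", impact=4, confidence=3, effort=2),
    Hypothesis("Day-2 email highlighting search", impact=2, confidence=4, effort=1),
    Hypothesis("Contextual search hints on large datasets", impact=4, confidence=3, effort=3),
    Hypothesis("Power-user shortcuts tooltip", impact=3, confidence=2, effort=4),
]

for h in prioritize(backlog):
    print(f"{h.score:5.1f}  {h.action}")
```

With these scores the day-2 email (impact 2) ranks second, ahead of the contextual hints (impact 4), simply because it is so cheap to test. That is the ratio doing its job: it rewards fast, likely wins over big, expensive bets.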

Phase 4: Hypothesis Packaging

Most frameworks fall apart here. They give you prioritized ideas but no way to turn them into strategies.

Each strategy needs:

The Hypothesis: “We believe that [specific action] will move [specific metric] by [approximate amount] because [our reasoning]”

The Test: How we’ll validate this hypothesis in the smallest way possible

The Scale Plan: If the test works, how we’ll roll it out

The Success Criteria: Exactly which metrics need to move, and by how much, for us to call this a success

For the Advanced Search adoption example, a strategic initiative might look like:

Hypothesis: “We believe that adding a persistent search bar to the main dashboard will increase Advanced Search adoption by 40% and reduce first-month churn by 15% because users currently abandon our product believing we lack search functionality they specifically need”.

Test: A/B test with 50% of new users seeing the prominent search bar, measuring both feature usage and 30-day churn rates.

Scale Plan: If the test increases search adoption by 25%+ and reduces churn by 10%+, roll it out globally based on user behavior patterns.

Success Criteria: Advanced Search adoption increases from 20% to 30%+ (a relative lift of at least 50%) within the first week, and first-month churn decreases from 40% to 35% or lower (a relative reduction of at least 12.5%).
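One way to keep all four parts together is to treat each initiative as a structured record rather than a slide. A minimal sketch, assuming a hypothetical `StrategicInitiative` record (not something the framework prescribes): it renders the hypothesis sentence from the template and lists any part that is still blank. The example is a draft of the search bar initiative whose scale plan and success criteria haven't been written yet.

```python
from __future__ import annotations
from dataclasses import dataclass, field, fields

@dataclass
class StrategicInitiative:
    action: str                 # [specific action]
    metric: str                 # [specific metric]
    amount: str                 # [approximate amount]
    reasoning: str              # [our reasoning]
    test: str = ""              # smallest possible validation
    scale_plan: str = ""        # rollout if the test works
    success_criteria: list[str] = field(default_factory=list)

    def hypothesis(self) -> str:
        """Fill in the 'We believe that...' template."""
        return (f"We believe that {self.action} will move {self.metric} "
                f"by {self.amount} because {self.reasoning}.")

    def missing_parts(self) -> list[str]:
        """Names of empty fields; the initiative isn't packaged until this is empty."""
        return [f.name for f in fields(self) if not getattr(self, f.name)]

# Draft: hypothesis and test written, scale plan and success criteria still missing
search_bar = StrategicInitiative(
    action="adding a persistent search bar to the main dashboard",
    metric="Advanced Search adoption",
    amount="40%",
    reasoning="users currently abandon our product believing we lack search functionality they need",
    test="A/B test: 50% of new users see the search bar; measure feature usage and 30-day churn",
)

print(search_bar.hypothesis())
print(search_bar.missing_parts())  # ['scale_plan', 'success_criteria']
```

The point of `missing_parts` is the Phase 4 rule itself: an idea with a hypothesis but no success criteria is still just a prioritized idea, not a strategy.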

Making It Systematic

The Strategy Bridge works at every level of your KPI tree.

Stuck on your North Star?

Use Node Interrogation to identify which supporting metrics have the most potential, then dive deeper.

Driver metrics not moving?

Work down to the input metrics where you have direct control.

Here’s what this looks like in practice:

B2B SaaS: A team struggling with “Active Workspace Usage” discovered that their driver metric, “Cross-Team Collaboration Events”, barely registered in the first 30 days. Node Interrogation revealed that teams did invite colleagues, but the invited users rarely engaged because they weren’t shown relevant shared content during onboarding. Their hypothesis: during the new user’s first session, surface 3-5 workspace items the inviter recently edited. Test result: 3x increase in invited user activation, 40% lift in workspace retention.

E-commerce: Another PM working on “Customer Lifetime Value” noticed their “Second Purchase Rate” was abysmal. Instead of generic retention campaigns, they interrogated the metric and found that customers who bought from 2+ categories had 5x higher repeat rates. Their hypothesis: Show complementary category recommendations immediately after first purchase. Test result: Second purchase rate increased 60% in the test segment.

Fintech: A team fixated on “Loan Application Completion” kept shipping UI improvements with little visible impact on end users. Node Interrogation on “Document Upload Success” revealed that users abandoned during bank statement uploads. Their hypothesis wasn’t better UI; it was letting users link their bank accounts directly instead. Test result: Completion rate jumped from 45% to 78%.

The pattern? Each team had metrics telling them something was broken, but no systematic way to turn that knowledge into testable strategies. The Strategy Bridge gives you a repeatable process.

The key is treating each strategic initiative as a hypothesis you can test, not a commitment you have to see through. Most hypotheses will be wrong; that’s expected. The goal is to systematically test your way to strategies that actually work.

Where Most PMs Go Wrong

I’ve seen teams derail this process in predictable ways:

Skipping Node Selection: Trying to generate strategies for every metric in your KPI tree. You end up with 47 half-baked ideas and no clarity about what matters. Pick 1-2 nodes maximum. Do those well.

Confusing Hypotheses with Solutions: Writing “improve onboarding” instead of “we believe adding a 3-step tutorial will increase Day 7 activation by 25% because users currently abandon when they hit the blank dashboard”. Vague strategies produce vague results.

Optimizing for Completeness: Spending three weeks researching every possible influence factor before testing anything. The Strategy Bridge isn’t about finding the perfect hypothesis; it’s about systematically testing your way to what works. Ship your first test within a week or you’re doing it wrong.

Ignoring the Effort Variable: Prioritizing high-impact hypotheses that require six months of engineering work. If you can’t test it in 2-4 weeks, break it down or pick a different hypothesis. Velocity matters more than perfection.

Treating Strategies as Commitments: Continuing to build out a strategy after the test shows marginal results because “we already started”. The whole point of the framework is to fail fast on bad hypotheses. If your test doesn’t hit success criteria, kill it and move to the next one.
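The kill-or-scale call is easiest to honor when it's written down before the test starts. A minimal sketch, assuming the Scale Plan thresholds from the Advanced Search example (25%+ adoption lift, 10%+ churn reduction) are relative changes; the `relative_change` and `decide` helpers and the sample rates are hypothetical. It deliberately ignores statistical significance, which a real A/B readout would also need to check.

```python
from __future__ import annotations

def relative_change(control: float, variant: float) -> float:
    """Relative change of the variant vs. control, e.g. 0.20 -> 0.26 is about +0.30."""
    if control == 0:
        raise ValueError("control rate must be non-zero")
    return (variant - control) / control

def decide(control_adoption: float, variant_adoption: float,
           control_churn: float, variant_churn: float,
           min_adoption_lift: float = 0.25,
           min_churn_reduction: float = 0.10) -> tuple[str, float, float]:
    """Return ("scale" or "kill", adoption lift, churn reduction), all relative."""
    adoption_lift = relative_change(control_adoption, variant_adoption)
    churn_reduction = -relative_change(control_churn, variant_churn)  # positive = less churn
    hit = adoption_lift >= min_adoption_lift and churn_reduction >= min_churn_reduction
    return ("scale" if hit else "kill"), adoption_lift, churn_reduction

# Hypothetical readouts; rates are fractions of new users in each arm
for readout in [(0.20, 0.26, 0.40, 0.35),   # clears both bars -> "scale"
                (0.20, 0.24, 0.40, 0.35)]:  # marginal adoption lift -> "kill"
    decision, lift, reduction = decide(*readout)
    print(f"{decision}: adoption {lift:+.1%}, churn {-reduction:+.1%}")
```

The second readout is the "marginal results" case: churn improves, but the adoption lift lands around 20%, short of the 25% bar, so the rule says kill it rather than keep building because "we already started".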

The teams that make this work are ruthlessly focused on small nodes, specific hypotheses and fast tests. The ones that struggle are trying to boil the ocean.

Your Next Steps

Here’s your challenge: Pick one metric from your KPI tree that’s been stuck for the past two quarters. Run it through the Strategy Bridge this week:

Choose 1-2 high-impact metrics where you have direct control

Write down 3-5 influence hypotheses for each metric

Score them on Impact × Confidence ÷ Effort

Turn your top 2 hypotheses into complete strategies with success criteria

Ship your first test within 7 days

Not next month. Not after the next planning cycle. This week.

The difference between KPI trees that collect dust and ones that drive decisions isn’t the quality of the tree; it’s having a systematic process to turn measurements into testable strategies.

You built the diagnostic tool. Now use it.

What’s one metric from your KPI tree that’s been sitting in your quarterly review deck for the past six months? What’s your hypothesis about what might actually move it?

This is the second post in a series about turning metrics into action. Part 1 covered building KPI trees that pass the Tuesday Afternoon Test.

Part 2 (this post) shows you how to turn those trees into strategies.

The system only works if you do both.
